Stop Guessing: A/B Test Your Product Content to Sell More

You don’t need to overhaul your entire product catalog to boost sales. Sometimes, just swapping a headline or testing a new image layout is enough to shift results. The key is knowing what to change, and that’s where A/B testing comes in. It’s the most reliable way to figure out what content actually works, based on real buyer behavior. Whether you’re fine-tuning product pages on Amazon or managing a large multi-channel catalog, a smart testing strategy can quietly unlock better performance with every experiment.

In this article, we’ll dig into what A/B testing looks like in practice, what parts of your product content are worth testing, how to run clean, effective experiments, and how to turn your results into broader growth strategies.

What A/B Testing Actually Is

A/B testing means creating two different versions of a single piece of content and showing them to different groups of users at the same time. You track the performance of each version and compare outcomes – sales, clicks, conversion rate, or any other goal.

For example, version A shows your original product title, while version B shows a revised title with added benefits or keywords. If version B drives more conversions, you have data to support using it across the board.

It’s not a vibe check or a hunch. It’s a structured way to learn what your customers actually respond to.
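
If you run tests on a site you control rather than through a marketplace tool, the "different groups at the same time" part is usually handled by bucketing each visitor. Here's a minimal sketch, assuming you have a stable visitor ID. The function `assign_variant` and the experiment name are hypothetical, and platforms like Amazon's Manage Your Experiments handle the split for you.

```python
import hashlib


def assign_variant(visitor_id: str, experiment: str) -> str:
    """Deterministically bucket a visitor into version "A" or "B" (50/50)."""
    # Hashing experiment + visitor ID means a returning visitor always sees
    # the same version, and separate experiments split independently.
    key = f"{experiment}:{visitor_id}".encode("utf-8")
    bucket = int.from_bytes(hashlib.sha256(key).digest()[:8], "big") % 100
    return "A" if bucket < 50 else "B"


# Simulate 10,000 visitors hitting a hypothetical "title-test" experiment.
counts = {"A": 0, "B": 0}
for i in range(10_000):
    counts[assign_variant(f"visitor-{i}", "title-test")] += 1
print(counts)
```

The two counts come out close to even, and because the assignment is a hash rather than a coin flip, nobody sees version A on Monday and version B on Tuesday.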

This isn’t the same as usability testing, where you observe how people interact with your product. And it’s not multivariate testing either, which compares many variables at once and usually requires a larger dataset.

Think of A/B testing as your go-to tool for validating content decisions in a focused, controlled way.

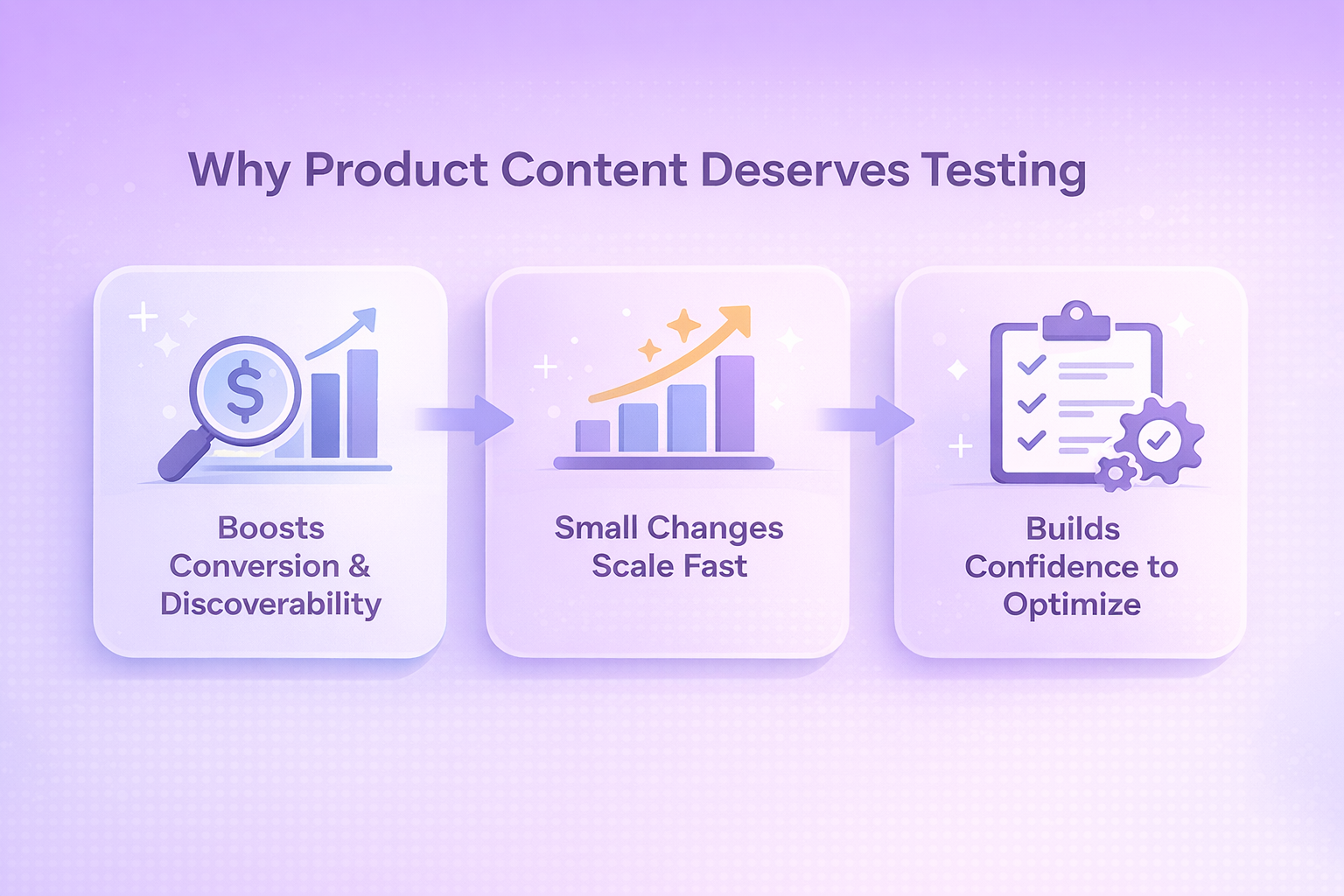

Why Product Content Deserves Testing

Designers and marketers are used to running experiments on ads, landing pages, and emails. But product content? That often gets left alone for too long. And that’s a missed opportunity.

Product detail pages play a huge role in the buying decision, especially on sales channels like Amazon or Shopify, where shoppers can compare dozens of similar products in a single session. Your title, bullet points, images, and descriptions are doing the heavy lifting, and they’re worth testing just like any other part of the customer journey.

Here’s why it matters:

- Content affects both conversion and discoverability: Your title can help you rank better and appeal to customers faster.

- Small changes scale fast: If one variation boosts conversions by even 3%, that impact multiplies across hundreds or thousands of sessions.

- Testing gives you confidence: You stop relying on guesswork and start building a system for continuous optimization.
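
To see how a small lift adds up, here's the arithmetic as a quick Python sketch. It assumes "3%" means a relative lift (a 10% conversion rate becoming 10.3%), not three percentage points, and the traffic figures are made up for illustration.

```python
def extra_orders(sessions: int, baseline_rate: float, relative_lift: float) -> float:
    """Additional orders gained when the conversion rate rises by a relative lift."""
    new_rate = baseline_rate * (1 + relative_lift)
    return sessions * (new_rate - baseline_rate)


# Hypothetical listing: 50,000 sessions a month at a 10% conversion rate.
print(round(extra_orders(50_000, 0.10, 0.03)))  # 150
```

That's 150 extra orders a month from a single listing, before you roll the winning change out to others.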

What You Can Test (And What You Should)

A/B testing doesn’t have to be complex. You can start small, with just one variable at a time. That’s actually the best way to isolate what’s working.

Here are some high-impact elements worth testing:

Product Titles

Try testing whether including your brand name makes a difference. You can also experiment with highlighting product benefits instead of just features. If your current title is overloaded with keywords, test a simpler version and see if it improves clarity and clicks.

Images

Run comparisons between lifestyle shots and clean product-only images. Test different angles, zoom levels, or even infographic-style layouts that add context. You might also explore how showing the product in use compares to a standard static view.

Bullet Points

Focus on what customers actually care about – size, materials, specific use cases. Test shorter, fact-based bullets against more detailed, benefit-driven ones. Reordering your points to lead with the strongest value can also change how buyers engage.

Descriptions

Try adding more storytelling or trust-building elements like guarantees or certifications. Break up long blocks of text with better formatting for easier scanning. And don’t be afraid to cut back – sometimes tighter, more focused copy performs better.

A+ Content (Amazon)

Experiment with different layout structures like side-by-side comparisons or video modules. You can also test different brand stories or upsell messages. Visuals matter too – charts, icons, and comparison tables might give your content the edge it needs.

How to Set Up a Solid Test Without Wasting Time

Running a test just to “see what happens” isn’t a strategy. If you want meaningful results, you need a plan.

1. Start With a Clear Hypothesis

Be specific. Instead of “Let’s see if a new image works,” try:

“We think lifestyle images will improve conversion rates compared to product-only shots.”

This helps define what you’re testing, why, and what success looks like.

2. Choose the Right Metric

You’re not always chasing more sales – sometimes the goal is more clicks, better engagement, or longer time on page.

Some useful A/B test metrics:

- Conversion rate.

- Units sold per visitor.

- Click-through rate (CTR).

- Add-to-cart rate.

- Revenue per visitor.

Pick the one that aligns with your hypothesis.
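
Most of these metrics are simple ratios of counts you already have. The sketch below uses hypothetical field names and defines conversion rate as orders per session; your platform may define it differently (for example, per unit rather than per order), so match its definitions before comparing versions.

```python
from dataclasses import dataclass


@dataclass
class VariantStats:
    impressions: int   # times the listing appeared in search results
    clicks: int        # clicks from search results through to the page
    sessions: int      # visits to the product page
    add_to_carts: int
    orders: int
    units: int
    revenue: float


def metrics(s: VariantStats) -> dict:
    """Turn raw counts for one version into comparable rates."""
    return {
        "click_through_rate": s.clicks / s.impressions,
        "conversion_rate": s.orders / s.sessions,
        "add_to_cart_rate": s.add_to_carts / s.sessions,
        "units_per_visitor": s.units / s.sessions,
        "revenue_per_visitor": s.revenue / s.sessions,
    }


# Hypothetical counts for one version of a listing.
version_a = VariantStats(impressions=40_000, clicks=1_800, sessions=2_000,
                         add_to_carts=300, orders=160, units=180, revenue=3_600.0)
for name, value in metrics(version_a).items():
    print(f"{name}: {value:.3f}")
```

Compute the same dictionary for version B and compare only the metric your hypothesis names; the rest are supporting data.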

3. Focus on One Variable

Don’t change multiple things at once or you won’t know what made the difference. Keep it clean:

- One title change.

- One image swap.

- One rewritten bullet list.
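
If your listing versions live in a spreadsheet or a catalog export, a quick check can catch accidental extra changes before a test goes live. This is a hypothetical helper, assuming each version is a dictionary mapping field names to values.

```python
def changed_fields(control: dict, variant: dict) -> list:
    """List the listing fields whose values differ between two versions."""
    keys = set(control) | set(variant)
    return sorted(k for k in keys if control.get(k) != variant.get(k))


def check_single_variable(control: dict, variant: dict) -> str:
    """Return the one changed field, or raise if zero or several changed."""
    diff = changed_fields(control, variant)
    if len(diff) != 1:
        raise ValueError(f"expected exactly one changed field, got {diff}")
    return diff[0]


# Hypothetical listing fields; only the title differs in version B.
version_a = {
    "title": "Premium Stainless Steel Garlic Press",
    "main_image": "press-front.jpg",
    "bullets": ("Dishwasher safe", "Ergonomic handle"),
}
version_b = {**version_a, "title": "Easy-Clean Garlic Press with Comfortable Grip"}
print(check_single_variable(version_a, version_b))  # title
```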

4. Let It Run Long Enough

A common mistake is ending the test too early. Decide up front how much data you need, then let the experiment run until you’ve collected it, so you can tell whether a difference is statistically significant or just noise.

Amazon’s Manage Your Experiments tool can do this automatically by ending the test “to significance.” If you’re running it manually, use an A/B test sample-size calculator to work out how many sessions you need and how long the test should run.
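
For a rough idea of what those calculators do, the sketch below uses the standard normal-approximation formula for comparing two conversion rates. The inputs (baseline rate, the smallest lift you care about, a 5% significance level, and 80% power) are assumptions you'd set for your own listing.

```python
import math
from statistics import NormalDist


def sample_size_per_variant(baseline: float, relative_lift: float,
                            alpha: float = 0.05, power: float = 0.8) -> int:
    """Sessions needed in EACH version to detect the given relative lift."""
    p1 = baseline
    p2 = baseline * (1 + relative_lift)
    p_bar = (p1 + p2) / 2
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided test
    z_beta = NormalDist().inv_cdf(power)
    numerator = (z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
                 + z_beta * math.sqrt(p1 * (1 - p1) + p2 * (1 - p2))) ** 2
    return math.ceil(numerator / (p2 - p1) ** 2)


# Hypothetical: 10% baseline conversion, hoping to detect a 10% relative lift.
print(sample_size_per_variant(0.10, 0.10))
```

For those inputs it comes out to roughly 14,750 sessions per version. Halve the lift you want to detect and the required sample roughly quadruples, which is why small effects take so long to confirm.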

5. Analyze, But Don’t Overread

Sometimes, a winner is obvious. Other times, results are flat. That’s still useful. If version B performed worse, at least you know what not to do.

And don’t just look at the final metric; check supporting data:

- Did engagement improve but conversions didn’t?

- Did the test attract more clicks but a worse bounce rate?

Use the full picture to decide what to apply and what to test next.
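
Once the planned sample is in, a two-proportion z-test is one common way to check whether a difference in conversion rate is more than noise. This is a minimal sketch with hypothetical counts; it assumes sessions are independent and that the sample size was fixed before the test started, and it isn't necessarily what Amazon's tool uses internally.

```python
import math
from statistics import NormalDist


def two_proportion_z_test(conv_a: int, n_a: int,
                          conv_b: int, n_b: int) -> tuple:
    """Return (z, two-sided p-value); positive z means B converts better."""
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)  # rate if there were no difference
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothetical results: A = 300 orders / 5,000 sessions, B = 360 / 5,000.
z, p = two_proportion_z_test(300, 5_000, 360, 5_000)
print(f"z = {z:.2f}, p = {p:.3f}")
```

Here the p-value lands around 0.016, below the usual 0.05 threshold. Run the same check on your supporting metrics before declaring a winner.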

Smart Testing Habits That Actually Work

If you want to get long-term value from A/B testing, build it into your workflow. Here’s how to make it a habit without it becoming a burden:

- Use a backlog of ideas: Keep a running list of test ideas, pain points, or content that feels stale.

- Tag tests by goal: Label them “conversion,” “engagement,” “SEO,” etc., so you’re not chasing random wins.

- Document every test: What you changed, what happened, and what you learned. Treat testing like a feedback loop.

- Recycle your winners: Apply what worked in one listing across other SKUs, where relevant.

- Involve the team: Designers, marketers, and product owners all benefit from these insights.
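
A test log doesn't need special software. Here's a minimal sketch of one record per test, with a goal tag so you can review results by what they were meant to improve; the class, field names, SKUs, and entries are hypothetical.

```python
from dataclasses import dataclass
from datetime import date
from typing import List, Optional


@dataclass
class ExperimentRecord:
    sku: str
    goal: str                  # tag: "conversion", "engagement", "seo", ...
    hypothesis: str
    changed: str               # the single element that was changed
    started: date
    ended: Optional[date] = None
    result: str = ""           # what happened
    learning: str = ""         # what to reuse on other SKUs


def by_goal(log: List[ExperimentRecord], goal: str) -> List[ExperimentRecord]:
    """Filter the log so tests are reviewed against the goal they served."""
    return [r for r in log if r.goal == goal]


# Hypothetical entries: one finished test, one still running.
log = [
    ExperimentRecord("GP-100", "conversion", "Benefit-led title lifts conversion",
                     "title", date(2024, 3, 1), date(2024, 3, 29),
                     result="B won", learning="Lead titles with the main benefit"),
    ExperimentRecord("GP-100", "engagement", "Lifestyle main image lifts CTR",
                     "main_image", date(2024, 4, 1)),
]
print([r.changed for r in by_goal(log, "conversion")])  # ['title']
```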

A Realistic Example: What Success Looks Like

Let’s say you’re selling a kitchen gadget on Amazon. The current title is keyword-stuffed, but a little hard to read:

“Premium Stainless Steel Garlic Press – Heavy Duty, Easy Clean, Ergonomic Handle – Garlic Crusher for Home & Professional Use”

You create a variation:

“Easy-Clean Garlic Press with Comfortable Grip – Durable Stainless Steel Crusher for Home Cooking”

After two weeks of testing, you may find that:

- Version B had a 12% higher click-through rate.

- Conversion increased by 6%.

- The new title ranked slightly better on branded keywords.

It’s not dramatic, but it’s real. And now you can apply the same title structure across your other listings. That’s how incremental wins stack up.

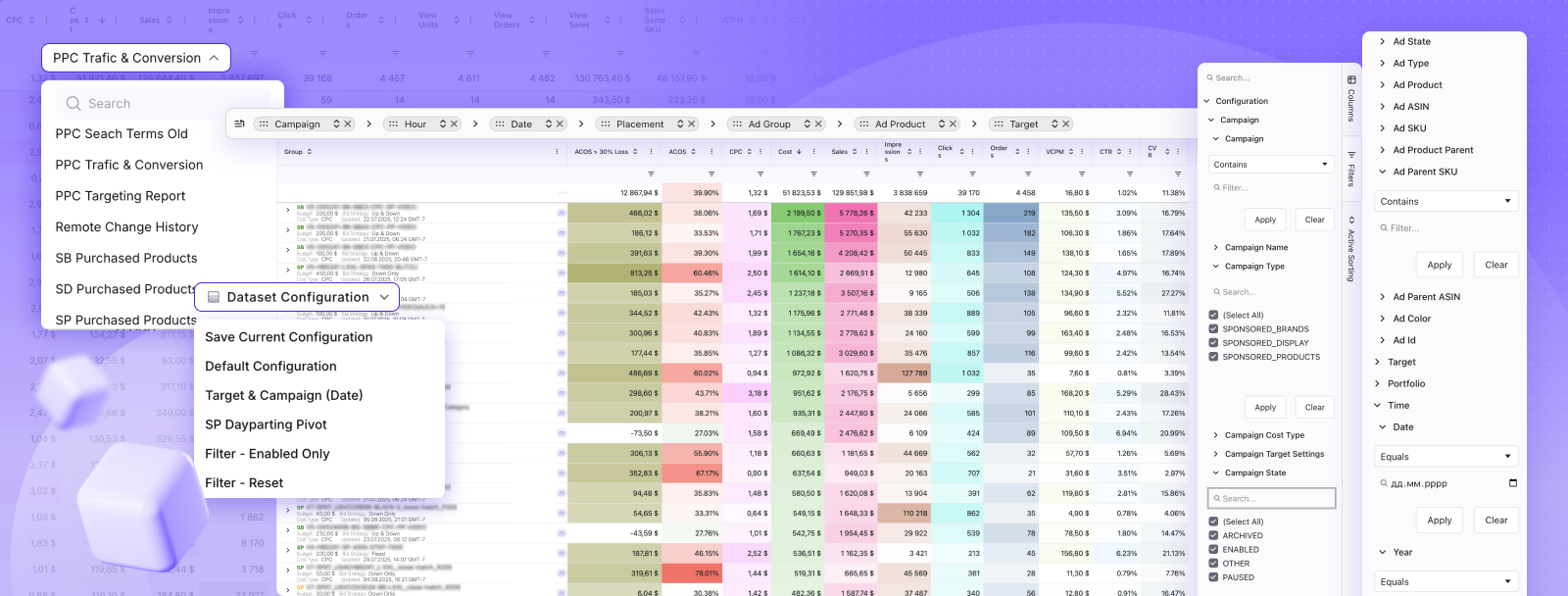

Where A/B Testing Meets Action: How We Help You Scale Smarter

At WisePPC, we believe testing is only the first step. The real value comes when you use those insights to drive action at scale. A/B testing shows you what works. We give you the tools to apply that learning across your entire advertising strategy, without the usual mess of spreadsheets or manual updates.

Let’s say your experiment reveals that listings with shorter titles convert better. Or maybe a new image layout drives higher click-through rates. With our platform, you don’t just update a single listing. You can use bulk actions to apply those changes across thousands of campaigns, ad groups, or targets in just a few clicks. And with real-time performance tracking, you’ll know immediately if those changes are moving the needle.

Because we’re built for marketplace sellers who need both insight and execution, our tools let you connect content decisions to your ad data, sales trends, and product-level performance. That means smarter testing, faster rollouts, and less time second-guessing what to optimize next.

Wrapping It Up: Test Less to Learn More

A/B testing isn’t about constantly changing everything or chasing perfection. It’s about being intentional with how you improve product content and backing your changes with real data.

It gives you clarity when things aren’t converting. It gives you proof when something does work. And it keeps your listings evolving as customer behavior shifts.

So next time you feel stuck or unsure about your content, don’t rewrite blindly. Just test it.

FAQ

1. Do I need a ton of traffic to run an A/B test on my product listing?

Not necessarily. You do need a minimum amount of traffic to get results that are statistically meaningful, but you don’t need thousands of visitors per day. Platforms like Amazon’s Manage Your Experiments will only let you test eligible ASINs that already meet that threshold, so you’re not flying blind. If you’re working with lower traffic, just expect tests to take a bit longer to reach significance.
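
To estimate how much longer a low-traffic test will take, divide the total sessions you need by the sessions the listing gets per day. The sketch assumes a hypothetical requirement of 14,750 sessions per version and a two-version test with traffic split evenly.

```python
import math


def estimated_test_days(sessions_per_day: float, needed_per_variant: int,
                        variants: int = 2) -> int:
    """Days needed to collect the planned sample across all versions."""
    return math.ceil(needed_per_variant * variants / sessions_per_day)


for daily in (2_000, 500, 100):
    print(f"{daily} sessions/day -> {estimated_test_days(daily, 14_750)} days")
```

At 2,000 sessions a day that's 15 days; at 100 a day it's 295. Many teams also round up to whole weeks so weekday and weekend shoppers are both represented.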

2. What’s the biggest mistake people make with A/B testing?

Trying to test everything at once. When you change multiple elements – say, the title and images and bullet points – you won’t know which one actually made the difference. It’s tempting to combine changes, but real learning comes from isolating variables. One element at a time keeps your insights clean.

3. How long should I let a test run before deciding which version wins?

Let the data decide, not the clock. Some platforms will end a test automatically once it reaches statistical significance. If you’re doing it manually, you’ll want enough sessions and conversions to be confident in the outcome. Cutting tests short too early is like reading half a book and thinking you know the ending.

4. Can A/B testing hurt my conversion rate if one version underperforms?

Short term? Maybe a little. But remember, you’re only showing that version to half your audience. And the risk is worth the long-term gain. Once you identify the better-performing version, you’ll apply it everywhere and recover that dip quickly. Testing is about long-term performance, not avoiding small stumbles.

5. Should I test visuals or copy first?

Start with what you suspect has the biggest impact or what gets the most attention. If your images feel off-brand or outdated, that’s a solid place to begin. If you think your product title isn’t helping you stand out in search, test that instead. There’s no fixed order, but make sure you’re solving a real problem, not just changing things to stay busy.

6. What happens after I find a winning version?

You publish it, sure, but don’t stop there. Take what you learned and look for similar opportunities across your other listings or campaigns. Think of every test result as a blueprint, not a one-off. And if you’re using a tool like WisePPC, you can roll out those changes at scale with just a few clicks. That’s where the real efficiency kicks in.

Join the WisePPC Beta and Get Exclusive Access Benefits

WisePPC is now in beta — and we’re inviting a limited number of early users to join. As a beta tester, you'll get free access, lifetime perks, and a chance to help shape the product — from an Amazon Ads Verified Partner you can trust.

No credit card required

Free during beta, plus an extra free month after release

25% off for life — limited beta offer

Access metrics Amazon Ads won’t show you

Be part of shaping the product with your feedback